It Could Happen (T)here: Transnational Advocacy Strategies Around Social Media

Invited workshop brings activists, scholars, and practitioners together from across disciplines, sectors, and countries.

This post was originally published on January 20, 2022 to RSM’s former Medium account. Some formatting and content may have been edited for clarity.

Social media facilitates engagement across cultures and time zones, and its impacts are global. When those impacts are negative, the harm — like all harms — is felt locally, and personally. But while the specifics are local, the harms can often be mirrored by similar effects elsewhere. This has proven a challenge to research and advocacy, as enormous, U.S.-based social media companies have been slow to register their global roles and react to severe impacts.

How might we better connect the experiences and negative impacts that social media has had around the world? How can we use those connections to advance advocacy, research, and regulatory efforts?

On December 2, the Berkman Klein Center’s Institute for Rebooting Social Media hosted an invited workshop to explore these questions with over 30 experts from six continents across academia, civil society, government, and industry. Participants were invited to identify transnational social media patterns across three key workstreams: 1) digital authoritarianism, 2) surveillance, and 3) propaganda. Participants were divided into three workstream groups. Each group met twice: first, to identify and explore key problems in the space, and second, to brainstorm potential research questions, projects, or advocacy campaigns that could address some of those problems.

The workshop’s foundational framework came from the paper Facebook’s Faces by Workshop Co-host Chinmayi Arun, who presented the work on power dynamics within and among social media companies and users along with slides illustrated by Hanna Kim.

Professor Arun’s paper highlights that social media companies are large and diverse organizations with internal teams working on objectives that are, at times, in tension with one another. Different user groups and states possess varying amounts of privilege, political power, and ability to force or inspire change, so coalitions and alliances across sectors and power valences are particularly important to making change in the social media space.

1) Digital Authoritarianism — regressing democracies, sanctions, platform authoritarianism, rising threats around mental health and collective attention spans, and risks to local platform employees

Workshop Co-host & Facilitator: Jessica Fjeld

The goal of this group was to think about the power dynamics in digital environments that are controlled by authoritarian regimes. The power of social media can be leveraged both to disrupt and to reinforce prevailing power structures, whether democratic or authoritarian. As democracies face a more contested online sphere, leaders in many autocratic regimes have looked to digital media to increase their power.

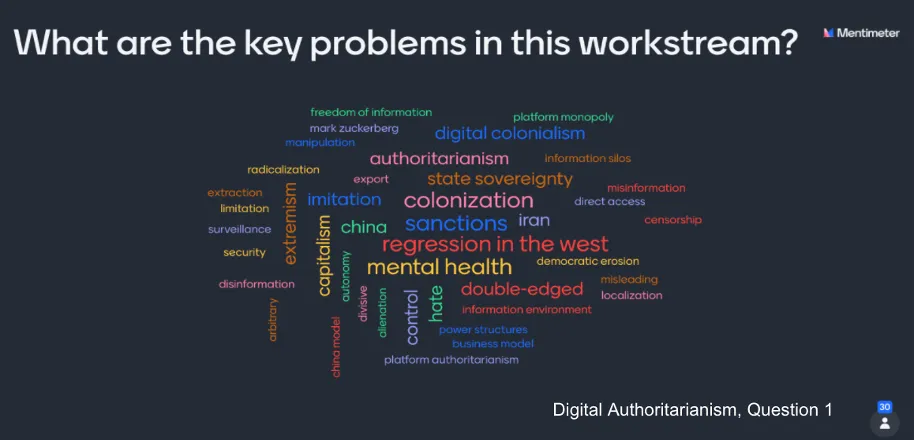

Some key problems of digital authoritarianism: overcompliance with sanctions, platform authoritarianism, resulting mental health challenges

In the first breakout session, participants identified a wide range of high-level problems (see figure), settling on three key problems to discuss further: sanctions, platform authoritarianism, and the resulting mental health challenges.

The conversation began with the shared understanding of increased democratic regression globally, and particularly in “Western” countries, with the rise of “strongman” leaders contributing to the spread of digital authoritarianism. One participant mentioned that social media platforms that used to be seen as champions of rights, particularly free expression, are now seen as the “bad guys,” and another mentioned that while the U.S. was previously considered a democratic champion internationally, now its own democracy is seen to be at risk. We need new kinds of champions across sectors and through alliances.

Efforts to combat authoritarianism outside the digital sphere also have impacts within it: government sanctions can have devastating consequences for ordinary people, including their ability to access technology. In particular, the group discussed how the United States’ sanctions against Iran have denied ordinary Iranians access to technology, from chat apps to web hosting. Some of the impacts stem from overcompliance on the part of many tech companies. For example, in 2018, Slack took down accounts of Iranian expats who should have been able to use the service.

Importantly, the group also highlighted the issue of “platform authoritarianism.” We tend to think about authoritarianism in terms of states, yet the design, policy, and behaviors of certain social media and technology companies can be considered authoritarian. Over time, many platforms have become gatekeepers. How can we reimagine social media platforms to be designed more democratically to support positive, flourishing civil societies?

Finally, authoritarianism is fed by the exhaustion of our collective attention spans and takes a toll on the mental health of people trying to resist, imagine, and create new futures, increasing the likelihood that such regimes emerge in democratic or semi-democratic countries. The group also highlighted the psychological challenges of living in the current COVID era, which make it even harder to tackle multiple simultaneous systemic threats.

Possible research questions, advocacy campaigns, and projects: an advocacy campaign for democratic governments to defend employees of platform companies

The group had numerous ideas, including building the conversation on U.S. tech platforms’ overcompliance with Iran sanctions; more scholarship on how capitalism can exert a similar force to authoritarianism in shaping technologies and services; and increased transparency from both platforms and governments on Internet censorship and surveillance.

One idea that the group discussed at length was launching an advocacy campaign for democratic governments to defend employees of platform companies. In the long term, to ensure they are providing safe and rights-protective services, social media companies need people on the ground in the countries where they operate. Yet, such local employees are increasingly being used as a tool to exert pressure by governments around the world. The group suggested that democratic governments could consider using their influence to advocate for the safety of employees who work on the ground in at-risk jurisdictions, much as they already do for journalists in similar situations. Governments protect journalists because journalists’ work is key to free expression and access to information; in a social media era, the same is true of platform employees in some situations. This idea was inspired by Chinmayi Arun’s paper, in that it acknowledges that tech companies and governments have multiple points of engagement, beyond those that digital rights advocates routinely focus on.

2) Surveillance — increasing transparency, understanding threats of digital vs. manual surveillance, limiting the sale of surveillance tech, and examining tradeoffs around use of surveillance and data to investigate criminal cases

Workshop Co-host & Facilitator: Paola Ricaurte Quijano

The second group explored the implications of datasets created by social media companies and how states have used them to enhance their surveillance strategies against journalists and dissidents. The secrecy imposed around national security can make it difficult to understand how states access and use data. This has created challenges for constitutional and legal frameworks across the world.

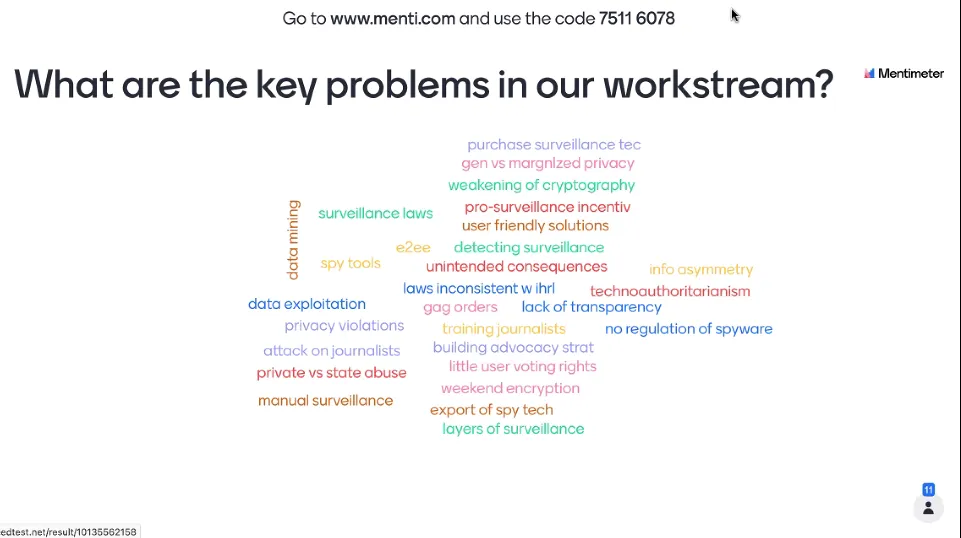

Some key problems of digital surveillance: lack of transparency, understanding digital vs. manual surveillance risks, tradeoffs of accessing “surveillance data” for criminal cases, lack of regulation on selling surveillance tech

One of the main problems is a lack of transparency about how and what data are being collected about people and how that data can be used to surveil. In turn, this affects people’s understanding of how to counterbalance and fight against these mechanisms, especially when surveillance impacts the most disadvantaged groups.

Participants argued that surveillance technologies, such as Pegasus software, have a significant negative impact on human rights and democratic values. For example, they are being used by authoritarian states against dissidents. Yet it is important to understand the differences between fully digital surveillance and more manual surveillance, such as checking people’s devices. These differences have a direct impact on disadvantaged groups and the strategies they would use to protect themselves and their communities.

There are strong relationships between increased surveillance and risks to human rights. For instance, there is a huge problem with using data for criminal purposes in Brazil. Notably, the judiciary does not understand how many technologies work. Therefore, we need to think about how to investigate these cases and access data for use in criminal cases, without breaking encryption and without encouraging judicial overreach that might later be used to target advocates.

In general, participants noted that encryption and surveillance have a push-and-pull relationship. While encryption can be absolutely key to protect the communications of human rights defenders, journalists, and dissident voices, it can also facilitate the transmission of hate speech at scale. As a consequence, encryption has to be tailored and thoughtfully deployed.

Further, participants emphasized the need for more robust regulations to curb the sale and sharing of surveillance technologies. For example, France is exporting spy technology to other regimes. For that reason, it is essential to raise awareness through advocacy campaigns. As an alternative, transparent and user-friendly solutions are necessary so everyone can understand how surveillance occurs and take measures to protect themselves.

Possible research questions, advocacy campaigns, and projects: analyzing both the state of encryption technologies and the current policies to combat overreach of surveillance

First, how might we analyze the state of encryption technologies? What technologies are in use? Where? Which might be legitimate surveillance technologies that society requires, and which are overreach?

Second, what current policies exist to combat overreach of surveillance or to encourage surveillance? How do companies react to these policies? This kind of research will allow for community-based responses with less intrusive effects and could help to improve the human rights impact that these technologies have.

3) Propaganda — platforms determining which publics to engage with, regulating political advertising, and determining accountability for disinformation

Workshop Co-host & Facilitator: Chinmayi Arun

The third group discussed the challenges in tackling misinformation spread by information operations amplified on social media in different parts of the world. Governments and politicians in many countries have used social media platforms to spread propaganda, causing or amplifying harm by manipulating citizens’ information environments. Private, global platform companies must wrestle with design, policy, fact-checking, and content moderation decisions that are the definition of high-profile and high-stakes.

Some key problems of “propaganda”: conceptualizing who is in the center vs. periphery, difficulty of intervening around political advertising, determining accountability for disinformation

In the first breakout session, the group highlighted key issues, such as dealing with misinformation spread through private messaging applications and the role of the government in curbing online misinformation. Finally, three interconnected issues were identified as being the biggest and most urgent challenges: center vs. periphery, political advertising, and determining accountability for disinformation.

The center vs. periphery issue concerns how to conceptualize the publics in a country: which are at the center, and which are in the periphery, affected by those with the power to spread and control misinformation. Susan Benesch from the Dangerous Speech Project stated that the key point is understanding who platforms should engage with to reduce mis- and disinformation effectively. Companies’ access to markets is generally mediated through governments, but governments are often major sources of disinformation. Publics have a stake in how social media platforms are run that is very different from that of states, and some publics are more disempowered than others, despite being the voices that should be heard. This is especially critical for the Global South.

The mention of the Global South triggered a conversation on political advertising. Governments rely on social media platforms for their political campaigns, but platforms intervene in elections in different ways. The group touched upon the role of the state in creating propaganda, highlighting the difficulty of distinguishing lawful political advertising from propaganda. Some noted that, in India, state actors also ally with non-state actors and blur the lines of political advertising.

This led to the question of determining accountability for disinformation. The group was interested in how to organize disempowered publics in strategic ways and in approaches to accountability for misinformation beyond platform accountability. It is difficult to pin down the people responsible and complicit in creating propaganda, especially when states are involved, but coalitions aimed at finding accountability may be an important avenue to explore to dismantle information operations more effectively.

Possible research questions, advocacy campaigns, and projects: better illuminate the connections between adtech and propaganda, observe how political advertising targets digital workers in election processes

Two relevant projects were proposed in response to the issue of political advertising. One idea was to better illuminate the connection between adtech and propaganda in different regimes and election processes. Another was to observe whether and how political advertising in various elections and advocacy campaigns targets digital workers in the election process. The group discussed how ad targeting has largely been viewed from a Global North perspective, and how platforms enforce different frameworks on election transparency, citing platforms’ treatment of elections in Brazil and the Philippines as examples. Susan Benesch added that diasporas are an oft-overlooked contributor to election propaganda in their countries of origin, a complexity that should be considered more carefully.

Benesch also suggested conducting a transnational, comparative study of government troll farms to investigate accountability for disinformation in such information operations. The group also discussed an idea from Jessica Dheere of Ranking Digital Rights on different theory-of-change advocacy strategies to counter corporate authoritarianism. She proposed a field manual on how to conduct corporate and investor advocacy, which differs from the government advocacy that is more typical for many advocacy organizations.

Other suggested topics included exploring the representation of whistleblowers as unreliable narrators, digging into the power dynamics between center/periphery and national/transnational actors, and global commissions on propaganda awareness and mitigation strategies with local chapters.

Looking ahead

Participants were energized and challenged by the discussions in the workshop, and left with ideas to use in their own work, as well as to share with others. As the Institute for Rebooting Social Media moves forward, these ideas — and in particular the transnational perspective brought by participants in this workshop — will be key.

This write-up was written by Amanda Besar, Jennifer Li, and Valentina Vera Quiroz. The workshop was organized by Chinmayi Arun, Jessica Fjeld, Paola Ricaurte Quijano, and Hilary Ross and advised by James Mickens and Jonathan Zittrain, with research assistance from Amanda Besar, Jennifer Li, and Valentina Vera Quiroz. Participants joined the workshop under a modified version of the Chatham House Rule — people could indicate that they would like their comments attributed — inspired by Kendra Albert’s The Chatham House Should Not Rule.